PM Lee urges vigilance against deepfakes after 'completely bogus' video of him emerges

In the deepfake video, Prime Minister Lee Hsien Loong discusses an investment opportunity purportedly approved by the Singapore government.

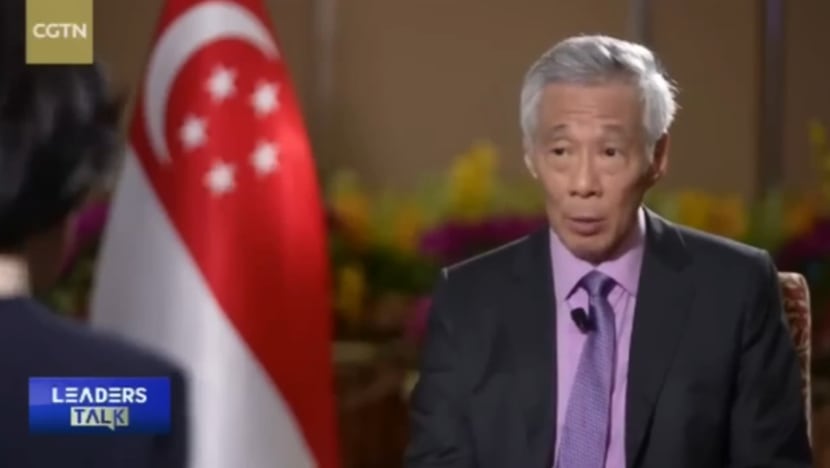

A screengrab from a deepfake video of Prime Minister Lee Hsien Loong promoting an investment scam.

SINGAPORE: A video purportedly showing Prime Minister Lee Hsien Loong promoting an investment product has emerged online, underscoring the pitfalls of artificial intelligence and its use to spread misinformation.

Mr Lee on Friday (Dec 29) asked members of the public not to respond to scam videos promising guaranteed returns on investments or giveaways.

"(Scammers) transform real footage of us taken from official events into very convincing but completely bogus videos of us purporting to say things that we have never said," he wrote in a Facebook post.

Mr Lee added that the use of deepfake technology to spread disinformation "will continue to grow".

"We must remain vigilant and learn to protect ourselves and our loved ones against such scams."

In the altered video, Mr Lee is seen being interviewed by a presenter from Chinese news network CGTN. They discuss an investment opportunity purportedly approved by the Singapore government, referring to it as a "revolutionary investment platform designed by Elon Musk".

The video ends with the presenter urging viewers to click on a link to register for the platform, to earn "passive income".

The deepfake video appears to have been manipulated from CGTN's actual interview with Mr Lee in Singapore in March.

Deepfakes are created using AI techniques to alter or manipulate visual and audio content.

"There is a noticeable increase in the malicious use of deepfakes across societies," said Assistant Professor Saifuddin Ahmed from the Nanyang Technological University's (NTU) Wee Kim Wee School of Communication and Information.

"Deepfake creators can now target not only high-profile individuals like celebrities and politicians but also ordinary citizens, particularly in the context of financial scams."

Asst Prof Ahmed told CNA on Thursday that he expects deepfakes featuring political leaders to become more prevalent.

"Predominantly, malicious actors are poised to employ such deepfakes for political subversion, aiming to manipulate public opinion, create deceptive narratives and erode trust in political institutions," he added.

"Similar to deepfakes involving celebrities, those featuring political figures may also be utilised in financial scams."

US President Joe Biden, for instance, is a regular target of deepfakes.

In October, a fabricated clip of Biden appearing to announce a military draft resurfaced amid the Israel-Hamas war.

In 2022, a deepfake video of Ukrainian President Volodymyr Zelenskyy emerged in which he appeared to call on his soldiers to lay down their weapons.

DEEPFAKES A "SIGNIFICANT CONCERN"

Deepfakes, including those of politicians, are a "significant concern", said Asst Prof Ahmed.

"A substantial portion of the general population exhibits a limited awareness of deepfakes and tends to overestimate their ability to discern authentic content from manipulated media," he continued.

"For instance, in Singapore, among those who are aware of deepfakes, a significant third inadvertently shared a deepfake, only realising later that the content they shared was not an authentic video on social media."

There is therefore a pressing need for increased awareness and education on deepfakes, he added.

When contacted about the latest deepfake video involving Mr Lee, the Prime Minister's Office pointed to his Facebook post in July after a rise in online advertisements misusing his image to sell investment opportunities.

"If the ad uses my image to sell you a product, asks you to invest in some scheme, or even uses my voice to tell you to send money, it's not me," Mr Lee said on Jul 22.

On Wednesday, The Straits Times reported on a deepfake video of Deputy Prime Minister Lawrence Wong purportedly promoting investment products.

The video modified Straits Times footage of Mr Wong and featured the news platform's logo.

"There are deepfakes of me endorsing commercial products, and also misinformation circulating on various networks that the government is looking to reinstate a circuit breaker," Mr Wong said in a Facebook post on Dec 11.

"These are all falsehoods."

HOW TO SPOT A DEEPFAKE

The number of deepfakes in Singapore has increased fivefold in the last year alone, according to findings in the Sumsub Identity Fraud Report 2023.

The quality of deepfaked videos tends to suffer and become blurry as a result of attempts to hide manipulation.

Viewers can also look out for visual and audio anomalies in the form of atypical facial movements or blinking patterns and noticeable edits around the face, said Trend Micro's Singapore country manager David Ng.

Viewers should also look closely to see if the audio matches lip movements.

Scammers tend to rely on scripts they have generated themselves to create deepfake videos and audio, which might expose their poor use of language, said Satnam Narang, a senior staff research engineer at cybersecurity firm Tenable.

If you encounter a deepfaked or suspicious video on YouTube or Facebook, you can report it to the platform.

YouTube has said it relies on a combination of people and technology to enforce its community guidelines. "The YouTube community plays an important role in flagging content they think is inappropriate," it said.

Flagging a video allows reviewers to evaluate it, and videos that a trained reviewer deems to violate policy are removed.