IN FOCUS: 'At the click of a button' – the scourge of deepfake AI porn

Some Singapore MPs were among dozens of victims to receive letters that had images of their faces superimposed on obscene pictures. The number of people potentially at risk of image-based sexual abuse increases exponentially with the advent of generative AI, says a women's rights group.

There has been an "explosion of deepfakes" in recent years, says Ms Sugidha Nithiananthan, a director at women's rights group AWARE. (Illustration: CNA/Clara Ho)

SINGAPORE: The letters were sent to their workplaces. Each showed the recipient's face superimposed onto an obscene photo of a man and a woman in an “intimate and compromising” position.

Contact this email address, or else, the letter threatened.

Those who contacted the email address were ordered to transfer money to a bank account, or compromising photographs and videos would be leaked and exposed on social media.

One 50-year-old victim transferred S$20,000 (US$14,700).

Victims of deepfake porn can feel helpless and powerless, said Dr Hanita Assudani, a clinical psychologist at Alliance Counselling.

“What has happened is somebody has used something that is part of me – my face, my pictures, my features – without my consent,” she added.

“The intrusiveness is present. It depends on each individual, but the level of intrusiveness, the shock, the humiliation, the shame, and the social consequences of it can be very similar to somebody who experienced a sexual assault.”

Some victims feel unsafe as their trust was broken, she said.

“I’m definitely concerned about anything that will take away consent and power from individuals, violate people’s boundaries,” said Dr Hanita.

There has been an “explosion of deepfakes” in recent years, said Ms Sugidha Nithiananthan, director of advocacy and research communications at the Association of Women for Action and Research (AWARE).

She pointed to a report by identity verification platform Sumsub that said the Asia Pacific region has seen a 1,530 per cent increase in deepfakes from 2022 to 2023.

“Mirroring trends in sexual violence, the non-consensual creation of sexually explicit material with deepfake technology is largely targeted at women,” she added.

But it is not just low-quality, black-and-white photos printed on paper that are a concern. Once online, these deepfake images travel quickly and widely, and are replicated across websites and platforms.

A quick search on Google by CNA showed multiple results, including one site that offered to fulfil requests to generate deepfake porn images of local celebrities and influencers.

Superstar Taylor Swift, social media influencer Bobbi Althoff and actress Natalie Portman are among celebrities who have reportedly been victims of deepfake AI porn.

In September last year, a man in South Korea was sentenced to more than two years in prison for using AI to create 360 virtual child abuse images.

Some people who view such pornographic material eventually think about creating it. A report by Home Security Heroes, a US company focused on preventing identity theft, found that deepfake pornography makes up 98 per cent of all deepfake videos online.

About 48 per cent of the 1,522 men surveyed had viewed deepfake pornography at least once, with “technological curiosity” being claimed as the top reason for doing so.

Twenty per cent of respondents considered learning how to create deepfakes.

“One in 10 respondents admitted to attempting the creation of deepfake pornography featuring either celebrities or individuals known to them,” the report said.

CREATING A DEEPFAKE "WITHIN MINUTES"

Between March and mid-April, the police received more than 70 reports of victims receiving extortion letters with manipulated obscene photos.

Among the victims were Foreign Affairs Minister Vivian Balakrishnan and Members of Parliament Tan Wu Meng, Edward Chia and Yip Hon Weng.

“With advancements to photo and video editing technology, including the use of Artificial Intelligence (AI) powered tools, manipulated photographs and videos may increasingly be used for extortion,” the police said in April.

Most of the victims’ photographs appeared to have been obtained from public sources, said the police.

Mr Chia warned that with readily available tools, “anyone can create deepfake content within minutes”.

"This can pose a significant threat to our social fabric. Unchecked, this can affect our public standing and those we love.”

The number of people potentially at risk of image-based sexual abuse increases exponentially with the advent of generative AI, said AWARE’s Ms Sugidha.

“Anyone who has had a photo of them posted online could be a possible victim.

“This makes detecting deepfakes particularly challenging, especially for individuals who have never shared intimate images and may not be actively looking out for such misuse, thus allowing perpetrators to evade detection,” she added.

Associate Professor Terence Sim of the National University of Singapore’s Centre for Trusted Internet and Community said AI-generated porn is “certainly a very pressing issue”.

“The bad guys are really coming up with ways to exploit this and then scam people,” he said.

“Various kinds (of people) - celebrities, politicians, even your normal man on the street. Ordinary folks like you and me.”

There is a range of tools that can be used to generate obscene photos and videos of varying quality.

It is possible to create a realistic deepfake video using just one high-resolution picture of the victim and a video that you want to superimpose the victim’s face onto, sometimes known as a “driving video”.

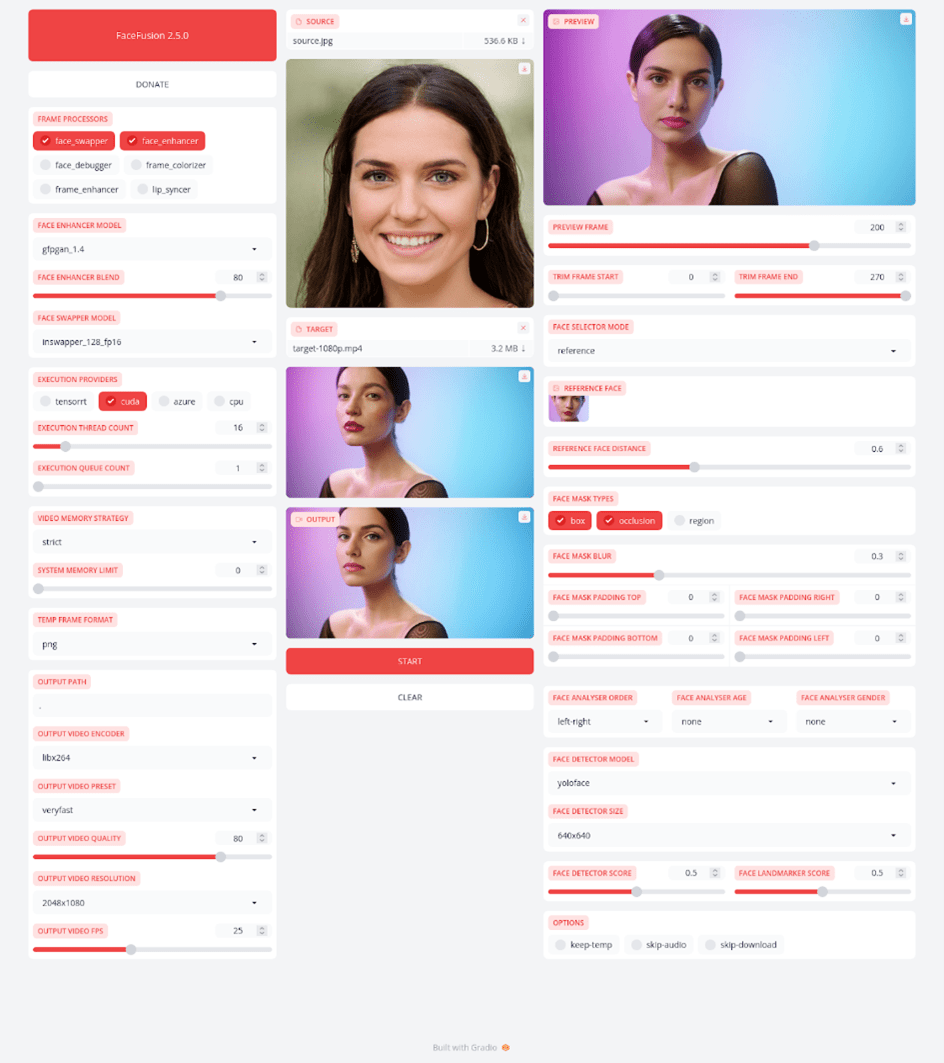

In a Talking Point episode, Prof Sim showed how he used AI-powered face swapping tool FaceFusion to create a deepfake of host Steven Chia within minutes.

“All I need is an actor that sort of looks like the victim,” he said.

If the “driving video” shows an actor in a compromising position, it can be digitally manipulated to show the victim’s face instead of the actor’s face.

Assoc Prof Sim said that as of now, most deepfakes are of “talking heads”, which refers to a close-up that focuses on the face of a target.

“You don’t even need the full body. It’s very easy for an actor to create this.

“Imagine, I want to fake Donald Trump, I can just wear clothes like Donald Trump, put the Oval Office or US flag behind me. That’s fairly easy to do.”

Installing FaceFusion manually requires knowledge of programming language Python, and is “not for the faint hearted”, he said.

But an automatic installer can be purchased for US$20. Once installed, the user interface is quite intuitive.

Upload a picture and a video, and the AI does most of the work. There are sliders and buttons to make adjustments to the deepfake video to make it as realistic as possible.

Tools that are easier to use will likely produce less realistic results, he said.

Mr Yip told CNA last month that the images in the letter were unsophisticated and of low quality. They were in black and white and not well rendered.

But it would not take long for someone to pick up the skills required to create a better deepfake, including by learning online.

Associate Professor Cheung Ngai-Man of the Singapore University of Technology and Design (SUTD) estimated that it would take about a week to learn what is needed to create a fairly realistic deepfake.

Face-swapping does not require specialised equipment either. A computer with “more high-end” specifications would allow a user to render these images to be more realistic, he said, adding that such computers are quite accessible.

The technology is continually advancing, with real-time generation of deepfakes getting closer to reality.

In April, Microsoft unveiled its VASA-1 program, which produces “lifelike audio-driven talking faces generated in real time”.

It only needs one photo and a one-minute audio clip to generate a realistic talking head. Some other audio AI tools require much longer audio input of 10 to 15 minutes.

The accessibility of these tools and the ease with which deepfakes can be created are a concern for AI experts.

“The truth is that these kind of tricks or blackmailing threats have been there ever since Photoshop came about, in fact maybe even earlier,” said SUTD’s Assistant Professor Roy Lee.

“What is getting us worried is how easy (it is). Last time you might need to be a Photoshop expert to basically do it right and make it look realistic.

“Right now, it’s just at the click of a button … it’s making things easier and it’s making things more realistic because generative AI is improving its performance,” he said.

Add the fact that photos and videos are disseminated far and wide on the internet, and the concern becomes greater.

“With all the positive things that come with the internet and generative AI, there is always a flipside,” he said. “If you’re abusing it then it’s more widespread, it’s more realistic.”

REMOVE ALL PHOTOS FROM SOCIAL MEDIA?

How can people protect against their faces being used in deepfake porn?

“Remove all your stuff from social media,” said NUS’ Assoc Prof Sim. “That’s certainly one way.”

But he acknowledged that it was not something everyone could do. For some people – such as celebrities, influencers or small business owners – social media is their livelihood.

Asst Prof Lee said that as people become more aware of deepfakes, they may decide to stay off social media and not post pictures or information about themselves.

Some of his friends who are more conscious and tech savvy have already done so. They also steer clear of using their faces as their WhatsApp or Telegram profile pictures.

Others are willing to accept the risk of posting their pictures online.

“It’s an individual choice,” said Asst Prof Lee.

He is concerned about those who post their pictures online without being aware of the risks that come with generative AI.

But as society becomes more educated on AI, people may realise how common deepfakes are.

“I believe that people will get smarter and … if there’s a video circulating of a friend in a compromised position, no one will believe that,” he said.

“If you reach that stage as a society, you become more aware of information verification, be more savvy about how information is created and all - then there is less worry.”

Experts are also working on improving the detection of deepfakes. One way to do so is for people to use “invisible watermarks” on photos and videos.

If the video or photo has been tampered with, analysis will reveal that the watermark has been broken and the contents are not to be trusted.

While this could work to help protect trusted media entities, it is less useful in the context of AI-generated porn since people are less likely to watermark their personal photos.
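The watermark check described above can be illustrated with a minimal Python sketch. It is a toy example and not the method used by the experts quoted here: the "image" is assumed to be a plain list of greyscale pixel values from 0 to 255, and the function names and the secret key are hypothetical. The sketch hides a keyed fingerprint of the picture in the least significant bit of the first 256 pixels, so that changing the visible content breaks the watermark.

```python
import hashlib
import hmac

TAG_BITS = 256  # a SHA-256 digest is 256 bits long


def _content_tag(pixels, key):
    """Keyed fingerprint of the 7 most significant bits of every pixel."""
    high_bits = bytes(p >> 1 for p in pixels)
    return hmac.new(key, high_bits, hashlib.sha256).digest()


def _to_bits(data):
    """Expand bytes into a list of 0/1 values, most significant bit first."""
    return [(byte >> i) & 1 for byte in data for i in range(7, -1, -1)]


def embed_watermark(pixels, key):
    """Return a copy of the image with the fingerprint hidden in the lowest bits."""
    if len(pixels) < TAG_BITS:
        raise ValueError("image too small to hold the watermark")
    bits = _to_bits(_content_tag(pixels, key))
    marked = list(pixels)
    for i, bit in enumerate(bits):
        marked[i] = (marked[i] & 0xFE) | bit  # overwrite only the lowest bit
    return marked


def verify_watermark(pixels, key):
    """True if the hidden fingerprint still matches the picture content."""
    if len(pixels) < TAG_BITS:
        return False
    expected = _to_bits(_content_tag(pixels, key))
    found = [p & 1 for p in pixels[:TAG_BITS]]
    return hmac.compare_digest(bytes(expected), bytes(found))


if __name__ == "__main__":
    key = b"demo-secret-key"  # hypothetical key held by the publisher
    original = [(i * 37) % 256 for i in range(1024)]  # stand-in for a photo
    marked = embed_watermark(original, key)
    print(verify_watermark(marked, key))  # intact image

    tampered = list(marked)
    tampered[500] ^= 0x80  # alter one pixel's visible content
    print(verify_watermark(tampered, key))  # watermark is now broken
```

A scheme this simple would not survive ordinary resizing or compression, and it ignores changes confined to the lowest bit of pixels outside the first 256, so it only illustrates the idea of a watermark that breaks when content is altered.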

CAN THE LAW KEEP UP?

Mr Cory Wong, director at Invictus Law Corporation, said there is no law at the moment that is specific to AI.

“It’s still always criminalising the human aspect of it, like what you did with that AI-generated image.”

Clicking on the “generate” button would not in itself be an offence, said Mr Wong. But if the image generated is obscene, then possessing it would be.

“You don’t just generate it in a vacuum,” he said. “You generate it and then you store it somewhere.”

People who possess obscene materials can be jailed for up to three months, fined, or both. Those caught transmitting obscene material face the same penalties.

Mr Chooi Jing Yen, a criminal lawyer at Eugene Thuraisingam LLP, said possession of an obscene deepfake video could also be an offence under the Films Act.

Offenders can be fined up to S$20,000 (US$14,700), jailed for up to six months, or both.

He also said that obscene materials do not necessarily need to depict a real human. "I don’t think that the mere fact (that) it’s AI-created means that it can’t be (obscene)," he added.

Depending on the specifics of the case, there could be other charges.

“A single act can attract a charge under extortion, it can also attract a charge under Section 377BA - it’s not specific to AI but it’s an offence of insulting somebody’s modesty by showing them a picture,” said Mr Chooi.

“The offence could also be under the Protection from Harassment Act, so there are a few routes to it. But extortion is probably the harshest one because it does carry a mandatory minimum prison sentence.”

Laws specific to deepfake AI pornography will soon be introduced in other countries.

Australia’s federal government announced in May that it will introduce legislation to ban the creation and non-consensual distribution of deepfake pornography.

The UK’s Ministry of Justice also announced in April a new law that criminalises the creation of sexually explicit deepfakes without consent.

The new law also means that if the creator has no intent to share it but wants to “cause alarm, humiliation or distress to the victim”, they will also be prosecuted.

Asked if there is a need to develop legislation for AI-related crimes in Singapore, Mr Chooi said that the technology is still evolving.

“You wouldn’t want to be passing laws every month or every few years because the space moves very quickly. You end up with a very piecemeal kind of situation and people are also all confused,” he said.

Mr Wong said that having specific laws might be good, but there may be too many things to cover.

“It’s really the use of AI or the misuse of AI that is the problem, and I think it’s very difficult to try to legislate every single thing,” he said.

There is also the potential problem of under-legislation, where the law is too wide.

“And then you realise that actually (the) law is a bit defective,” he said.

“Right now, with the existing (laws) - whether it’s Penal Code, whether it’s Miscellaneous Offences Act - they are sufficiently wide to capture all this, then maybe give it a bit more time to see how we will actually criminalise AI-related offences.”

If there is some AI misuse not captured within Singapore's current legislation, that would be the tipping point to push for more legislation, Mr Wong added.

Another scenario would be where the maximum sentences under existing provisions do not seem proportionate to the crime, according to Mr Chooi. In such a case, lawmakers may need to look into introducing new laws.

“I think generally that requires a certain number of such cases to surface first,” he said.

“When we punish people, and people are prosecuted and sentenced, one of the aims is general deterrence, which is to send a message to the public,” Mr Chooi added.

“If it’s not that prevalent then there’s no strong reason to do that.”

In response to CNA's queries, the Attorney-General’s Chambers said it has not prosecuted cases related to AI-generated pornography in Singapore.

“Such offences are relatively new, made possible by the recent evolution of AI technology,” a spokesperson said.

Although people are certainly generating obscene images, the lawyers said they have not personally encountered cases where someone has been charged with doing so.

When asked why this is the case, Mr Wong said such an offence first needs to be reported to the authorities. He gave the example of someone superimposing a face onto a naked body.

“Let’s say I don’t even know about it, or if anyone who sees it knows this is probably fake, then they may not take that first step of reporting it,” he said.

A HEAVY EMOTIONAL TOLL

Last year, a TikTok user named Rachel opened up about her harrowing experience of people using AI tools to generate fake nude images of her, before sending these pictures to her and other online users.

She had received a direct message on Instagram containing fake nude images of her. The anonymous user had taken photos from her public profile and run them through a “deepfake AI program” to create the obscene pictures.

In a TikTok video, she talked about receiving the message and said she had ignored it.

She then received dozens of messages containing AI-generated nudes of herself, this time from other users.

In a later video, she can be seen crying and asking her abusers to stop creating such images, calling them “rapists”.

When CNA searched for her TikTok username on Google, she appeared to have taken down her account. Several porn websites also appeared on the first page of the results.

Dr Hanita of Alliance Counselling said that when someone’s photo has been misused, they may not know whether a crime has been committed and whether the police can help them.

People around them may not be able to empathise or relate.

“Then you start thinking, is what I’m going through real? Is it a big deal? Because the crime feels very distant, they take my photo and use it another way. Is it real?

“But I will say it’s very real .... I think the person may not know how to go about reporting the crime, what else to do.”

Some victims develop symptoms of post-traumatic stress disorder, anxiety or depression.

The police told CNA that victim care officers, who have received training from police psychologists, can provide emotional and practical support to those who have been assessed to need it.

Dr Hanita said some victims may feel ashamed and want to keep things to themselves, or end up blaming themselves.

“The first thing is to remember that this is not your fault,” she said. “It’s your image and however you choose to use it, it has been used in another way.

“Even if you take a sexy photo of yourself and put it (online), you choose that this photo be used in this way – the social media way – not to be used in another way.”

Friends and family members can support victims emotionally and practically, such as by going with them to report the crime and helping to collect evidence to hand to the police.

“Don’t chide the person. Respect them, provide empathy, compassion for what they are going through,” she said. “That can be very helpful and powerful.”

She called on people to work together as well, by reporting images or accounts that seem suspicious, even if they do not know the person involved.

“As a community, that’s what we can do to step up vigilance. To know that this is not okay, it’s not funny … for somebody’s images to be used in a sexual manner, or to put them down or humiliate them.”